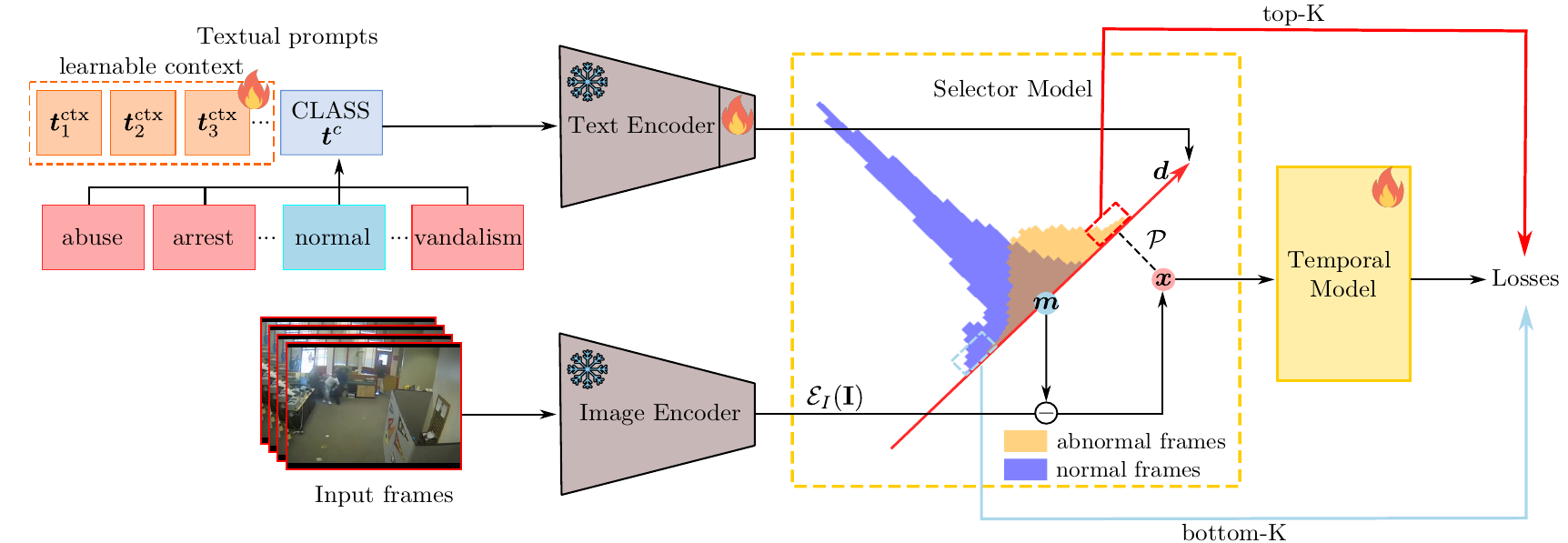

We tackle the complex problem of detecting and recognising anomalies

in surveillance videos at the frame level, utilising only video-

level supervision. We introduce the novel method AnomalyCLIP,

the first to combine Large Language and Vision (LLV) models, such

as CLIP, with multiple instance learning for joint video anomaly

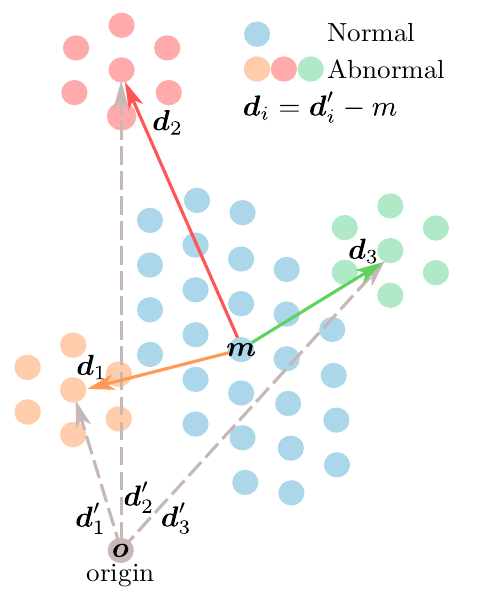

detection and classification. Our approach specifically involves

manipulating the latent CLIP feature space to identify the normal

event subspace, which in turn allows us to effectively learn text-

driven directions for abnormal events. When anomalous frames

are projected onto these directions, they exhibit a large feature

magnitude if they belong to a particular class. We also introduce a

computationally efficient Transformer architecture to model short-

and long-term temporal dependencies between frames, ultimately

producing the final anomaly score and class prediction probabilities.
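The projection idea described above can be illustrated with a small NumPy sketch. This is a toy illustration under stated assumptions, not the AnomalyCLIP implementation: the "normal prototype" is assumed here to be a single vector (for example, a mean of normal-frame features), the class directions are assumed to be normalised differences between text embeddings and that prototype, and all features are random stand-ins rather than real CLIP outputs. The function names `text_directions` and `project_on_directions` are hypothetical.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-8):
    """Scale vectors to (approximately) unit length along `axis`."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)

def text_directions(text_feats, normal_proto):
    """Assumed construction: one unit direction per anomaly class,
    pointing from the normal prototype towards the class text embedding."""
    return l2_normalize(text_feats - normal_proto)

def project_on_directions(frame_feats, directions, normal_proto):
    """Re-centre frame features on the normal prototype and project them
    onto each class direction. Returns a (T, C) matrix of signed magnitudes."""
    centered = frame_feats - normal_proto
    return centered @ directions.T

# Toy data: random vectors stand in for CLIP embeddings (assumption).
rng = np.random.default_rng(0)
D, C, T = 16, 3, 5                      # feature dim, classes, frames
normal_proto = rng.normal(size=D)       # hypothetical normal prototype
text_feats = normal_proto + rng.normal(size=(C, D))
directions = text_directions(text_feats, normal_proto)

# Four near-normal frames, then one frame pushed along class 1's direction.
normal_frames = normal_proto + 0.01 * rng.normal(size=(T - 1, D))
anomalous_frame = normal_proto + 3.0 * directions[1]
frame_feats = np.vstack([normal_frames, anomalous_frame])

scores = project_on_directions(frame_feats, directions, normal_proto)
predicted_class = int(np.argmax(scores[-1]))
```

In this toy setup, near-normal frames yield small projections on every direction, while the last frame has its largest projection on the direction of the class it was displaced along. In the method itself the directions are learned and the scores are further refined by the temporal model, which this sketch does not cover.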

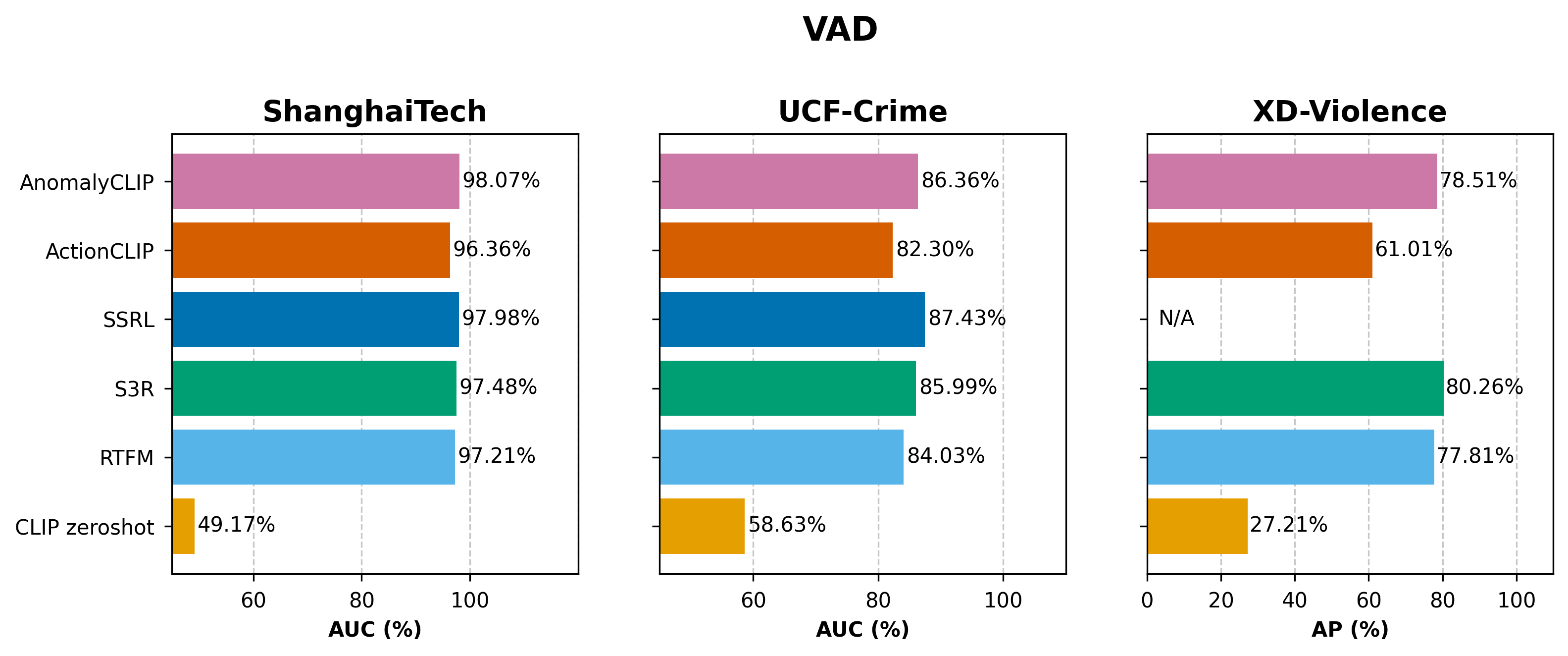

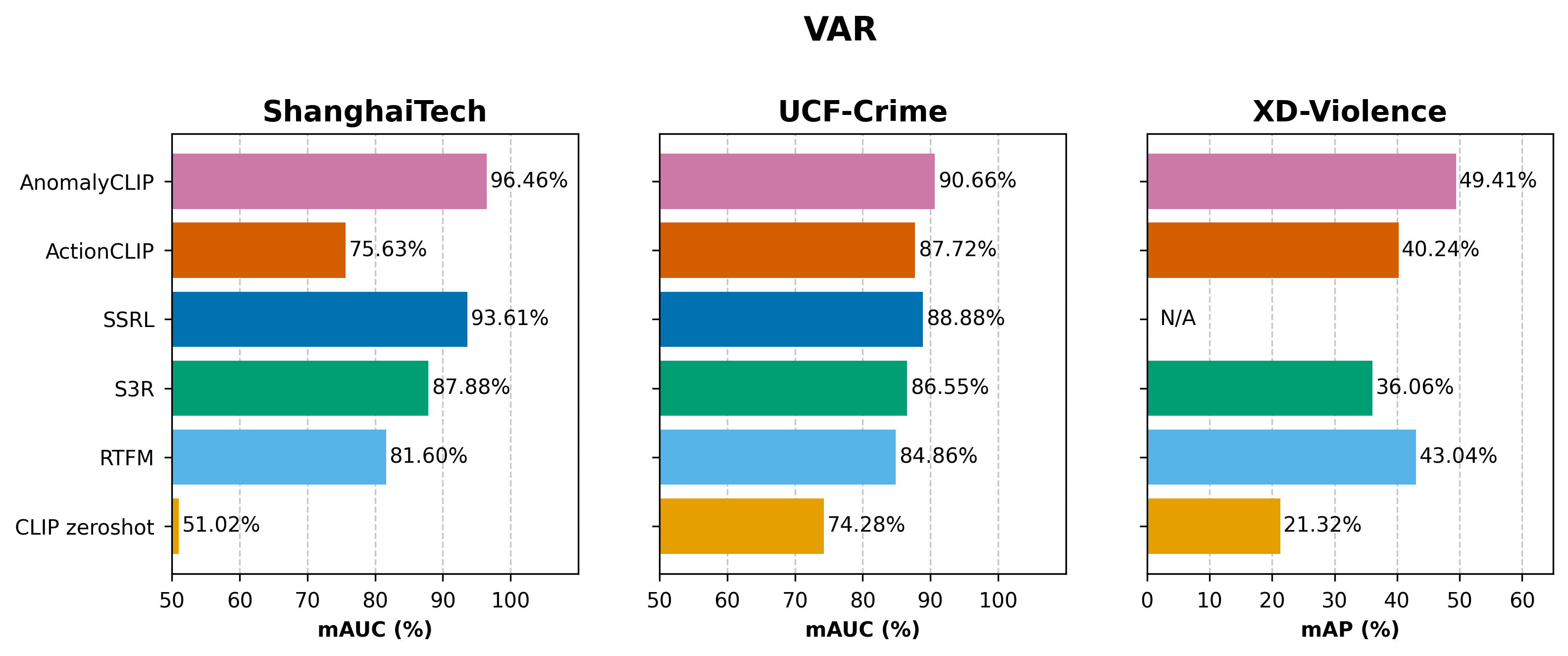

We compare AnomalyCLIP against state-of-the-art methods on three major anomaly detection benchmarks, i.e. ShanghaiTech, UCF-Crime, and XD-Violence, and empirically show that it outperforms baselines in recognising video anomalies.